10. Representing Marginal Valuation

Authors: Lars Peter Hansen and Thomas J. Sargent

Date: November 2024

10.1. Introduction

Partial derivatives of value functions appear in first-order conditions of Markov decision problems and measure marginal valuations. Since controls depend on partial derivatives of value functions, these derivatives also feature prominently in max-min formulations of robust control problems. They can also be used to measure losses from suboptimal choices. They are pertinent for both individual decision problems and social evaluations. Robust control theories have been used by [Hansen et al., 1999] to assess impacts of uncertainty on investment and equilibrium prices and quantities, by [Alvarez and Jermann, 2004] to evaluate the welfare consequences of uncertainty, and by [Barnett et al., 2020] to characterize social costs of carbon emissions in the presence of uncertainty about damages inflicted by climate change. Social costs of global warming are important contributors to social costs of carbon emissions that are often inferred from marginal impacts of fossil fuel emissions on climate indicators. These depend on uncertain damages to future economic opportunities.

This chapter imports insights about stochastic nonlinear impulse response functions that come from asset pricing methods for valuing uncertain cash flows. Our analysis relies on decompositions of partial derivatives that allow researchers to partition quantitative findings into contributing forces. This approach contributes to a broader agenda that aims to improve uncertainty quantification methods. Dynamic stochastic equilibrium models often involve several moving parts. Decomposing sources of implications from such models “opens black boxes” and helps provide plausible explanations of model outcomes. Our asset-pricing perspective allows us to think in terms of state-dependent discounting and stochastic flows reminiscent of stochastic payoffs to be valued. Moreover, this perspective allows us to relate stochastic flows to forcing functions that drive outcomes in a dynamic stochastic equilibrium model.

10.2. Discrete time

We start with a Markov process

where \(X\) has \(n\) components, \(Y\) is a scalar, and \(W\) is \(k\)-dimensional. We also want to study the associated variational processes

We use stochastic impulse responses to provide an “asset pricing” representation of partial derivatives of a value function with respect to one of the components of \(X_0\). Consider a value function that satisfies:

This value function might measure outcomes from using some arbitrary collection of decision rules, not necessarily socially optimal ones. To do a local policy analysis, we’ll want to compute marginal valuations for such a value function.
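To make the variational process concrete, here is a minimal Python sketch. It assumes a hypothetical scalar law of motion \(X_{t+1} = \psi(X_t, W_{t+1})\) and takes the variational process to follow \(\Lambda_{t+1} = \frac{\partial \psi}{\partial x}(X_t, W_{t+1}) \Lambda_t\) with \(\Lambda_0 = 1\), so that \(\Lambda_t\) is the pathwise derivative of \(X_t\) with respect to \(X_0\). The function `psi`, its parameters, and the helper names are illustrative assumptions, not objects taken from the chapter. The sketch compares the recursion with a finite-difference derivative computed along the same shock path.

```python
import math
import random

# Hypothetical scalar law of motion X_{t+1} = psi(X_t, W_{t+1}); illustrative only.
A, B, S = 0.9, 0.1, 0.2


def psi(x, w):
    return A * x + B * math.sin(x) + S * (1.0 + 0.5 * x) * w


def dpsi_dx(x, w):
    # Partial derivative of psi with respect to x.
    return A + B * math.cos(x) + 0.5 * S * w


def simulate(x0, shocks):
    """Return paths of X and of the variational process Lambda, with Lambda_0 = 1."""
    x, lam = x0, 1.0
    xs, lams = [x], [lam]
    for w in shocks:
        lam = dpsi_dx(x, w) * lam  # evaluated at X_t, before X is updated
        x = psi(x, w)
        xs.append(x)
        lams.append(lam)
    return xs, lams


rng = random.Random(0)
shocks = [rng.gauss(0.0, 1.0) for _ in range(20)]
xs, lams = simulate(1.0, shocks)

# Central finite difference in X_0, holding the shock path fixed.
eps = 1e-6
xs_up, _ = simulate(1.0 + eps, shocks)
xs_dn, _ = simulate(1.0 - eps, shocks)
fd = [(u - d) / (2.0 * eps) for u, d in zip(xs_up, xs_dn)]
max_gap = max(abs(f - l) for f, l in zip(fd, lams))
print(f"largest gap between Lambda_t and finite difference: {max_gap:.2e}")
```

Because the same shocks are reused for the perturbed paths, the comparison isolates the response to the initial condition, which is what a stochastic impulse response is meant to capture.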

Differentiate both sides of (10.3) with respect to \(X_t\) and \(Y_t\) and form dot products with appropriate variational counterparts:

View equation (10.4) as a stochastic difference equation and solve it forward for \(\frac{\partial V}{\partial x}(X_t) \cdot \Lambda_t + \Delta_t:\)

Initialize \(\Lambda_0 = \mathrm{e}_i,\) where \(\mathrm{e}_i\) is a coordinate vector with a one in position \(i\) and \(\Delta_0 = 0.\) This lets us represent the partial derivative of the value function as:

which resembles an asset pricing formula in which

acts as a vector stochastic discount factor process and the marginal contribution

acts as a vector stochastic cash flow process. The stochastic impulse response gives the marginal response of the state vector at date \(t\) to changes in the \(i^{th}\) state vector component at date zero, while the vector of marginal cash flows at date \(t\) measures impacts on utility of a marginal change in the date \(t\) state vector.
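The asset-pricing reading can be illustrated with a small Monte Carlo sketch. It rests on assumptions that are ours rather than the chapter's: the value function is normalized as \(V(x) = (1-\beta) E \sum_{t \ge 0} \beta^t U(X_t)\) with \(\beta = \exp(-\delta)\), \(Y\) is identically zero (so \(\Delta_t = 0\)), the state follows the hypothetical scalar law \(X_{t+1} = (a + s W_{t+1}) X_t\), and \(U(x) = -x^2/2\). Under these assumptions the derivative has the closed form \(-(1-\beta) x / [1 - \beta(a^2 + s^2)]\), which the simulated discounted sum of \(\frac{\partial U}{\partial x}(X_t) \Lambda_t\) should approximate.

```python
import random

beta = 0.95            # discount factor exp(-delta), assumed value
a, s = 0.8, 0.2        # hypothetical parameters of X_{t+1} = (a + s W_{t+1}) X_t
x0 = 1.0
T, n_paths = 60, 5000


def marginal_utility(x):
    """dU/dx for the assumed utility U(x) = -x**2 / 2."""
    return -x


rng = random.Random(1)
contrib = [0.0] * (T + 1)   # horizon-by-horizon contributions to dV/dx
for _ in range(n_paths):
    x, lam = x0, 1.0        # Lambda_0 = 1 plays the role of the coordinate vector
    for t in range(T + 1):
        contrib[t] += (1 - beta) * beta**t * marginal_utility(x) * lam / n_paths
        growth = a + s * rng.gauss(0.0, 1.0)   # d psi / dx at (X_t, W_{t+1})
        x, lam = growth * x, growth * lam

dV_dx_mc = sum(contrib)
dV_dx_exact = -(1 - beta) * x0 / (1 - beta * (a**2 + s**2))
print(f"Monte Carlo: {dV_dx_mc:.4f}   closed form: {dV_dx_exact:.4f}")
```

The list `contrib` keeps the contribution of each horizon separately, which is the kind of additive decomposition that the representation makes available.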

Remark 10.1

Sometimes it is convenient to apply summation by parts:

Substituting into (10.5) gives:

10.3. Continuous time

A continuous-time formulation allows us to distinguish small shocks (Brownian increments) from large shocks (Poisson jumps). Let’s consider a continuous-time specification with Brownian motion shocks, i.e., diffusion dynamics. We can treat jumps as terminal conditions for which we impose continuation values conditioned on a jump taking place. The possibility of a jump contributes to the value function. After developing this approach, we shall extend it to include valuations that reflect concerns about model misspecifications, i.e., “robust valuations.”

10.3.1. Diffusion dynamics

We start with a Markov diffusion that governs state dynamics

that need not be the outcome of an optimization problem.

Using the variational process construction in the previous chapter, recall that

With the appropriate stacking, the drift for the composite process \((X,\Lambda)\) is:

and the composite matrix coefficient on \(dW_t\) is given by

Similarly, \(\Delta\) is the scalar variational process associated with \(Y\) with evolution

10.3.2. An initial representation of a partial derivative

Consider the evaluation of discounted utility where the instantaneous contribution is \(U(x)\) and \(x\) is the realization of a state vector \(X_t\). The value function \(V\) satisfies a Feynman-Kac (FK) equation:

As in the discrete-time example, we want to represent

as an expected discounted value of a marginal impulse response of future \(X_t\) to a marginal change of the \(i^{th}\) coordinate of \(x.\)

By differentiating Feynman-Kac equation (10.8) with respect to each coordinate, we obtain a vector of equations, one for each state variable. We then form the dot product of this vector system with respect to \(m\) to obtain a scalar equation system that is of particular interest. The resulting equation is a Feynman-Kac equation for the scalar function:

as established in the Appendix. Given that the equation to be solved involves both \(\lambda\) and \(x\), this equation uses the diffusion dynamics for the joint process \((X,\Lambda)\).
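The joint dynamics of \((X,\Lambda)\) can be simulated with a standard Euler-Maruyama scheme. The sketch below is for a scalar state and assumes that the variational process evolves as \(d\Lambda_t = \frac{\partial \mu}{\partial x}(X_t) \Lambda_t dt + \frac{\partial \sigma}{\partial x}(X_t) \Lambda_t dW_t\). The drift `mu`, the volatility `sigma`, and all parameter values are hypothetical choices made for illustration. As a check, the simulated \(\Lambda_T\) is compared with a finite-difference derivative of \(X_T\) with respect to \(X_0\) along the same Brownian increments.

```python
import math
import random

KAPPA, THETA, S = 0.5, 1.0, 0.3   # hypothetical parameters


def mu(x):
    return KAPPA * (THETA - x)


def dmu(x):
    return -KAPPA


def sigma(x):
    return S * math.sqrt(1.0 + x * x)


def dsigma(x):
    return S * x / math.sqrt(1.0 + x * x)


def euler_joint(x0, dws, dt):
    """Euler-Maruyama path of (X, Lambda) with Lambda_0 = 1; returns terminal values."""
    x, lam = x0, 1.0
    for dw in dws:
        # Both updates use the current X; the tuple assignment evaluates the
        # right side before rebinding x and lam.
        x, lam = (x + mu(x) * dt + sigma(x) * dw,
                  lam + (dmu(x) * dt + dsigma(x) * dw) * lam)
    return x, lam


dt, n_steps = 0.01, 500
rng = random.Random(2)
dws = [rng.gauss(0.0, math.sqrt(dt)) for _ in range(n_steps)]

x_T, lam_T = euler_joint(1.0, dws, dt)
eps = 1e-6
fd = (euler_joint(1.0 + eps, dws, dt)[0] - euler_joint(1.0 - eps, dws, dt)[0]) / (2 * eps)
print(f"Lambda_T = {lam_T:.6f}, finite difference = {fd:.6f}")
```

The discretized \(\Lambda\) recursion is the exact derivative of the discretized \(X\) recursion, so the two numbers should agree up to finite-difference error.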

The solution to this Feynman-Kac equation takes the form of a discounted expected value:

By initializing the state vector \(\Lambda_0\) to be a coordinate vector of zeros in all entries except \(i\) and setting \(\Delta_0 = 0\), we obtain the formula we want, which gives the partial derivative as a discounted present value using \(\delta\) as the discount rate. The contribution, \(\Lambda_{t},\) is the marginal response of the date \(t\) state vector to a marginal change in the \(i^{th}\) component of the state vector at date zero. The marginal change in the date \(t\) state vector induces a marginal reward at date \(t\):

which provides us with a useful interpretation as an asset price. The process \(\Lambda\) gives a vector counterpart to a stochastic discount factor process and \(\delta \frac {\partial U}{\partial x} (X_{t}) + \Delta_t\) gives the counterpart to a cash flow to be valued.

One application of representation (10.9) computes the discounted impulse response:

for \(t \ge 0\) and for \(j=1,2,...,n\) along with

for \(t \ge 0\) as an intertemporal, additive decomposition of the marginal valuation of one of the state variables as determined by an initialization of \(\Lambda_0.\)
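An intertemporal decomposition of this kind can be sketched numerically. The example assumes, for illustration only, the normalization \(V(x) = \delta \int_0^\infty \exp(-\delta t) E[U(X_t) \mid X_0 = x] dt\), a scalar state with \(dX_t = -\kappa X_t dt + s\, dW_t\), \(U(x) = -x^2/2\), and \(Y\) identically zero so that \(\Delta_t = 0\). Under these assumptions \(\frac{\partial V}{\partial x}(x) = -\delta x/(\delta + 2\kappa)\). All names and parameter values are hypothetical.

```python
import math
import random

delta, kappa, s = 0.5, 0.5, 0.2            # hypothetical parameters
x0, dt, n_steps, n_paths = 1.0, 0.02, 750, 500


def marginal_utility(x):
    """dU/dx for the assumed utility U(x) = -x**2 / 2."""
    return -x


# Discount weights delta * exp(-delta * t) * dt on a left-endpoint time grid.
weights = [delta * math.exp(-delta * n * dt) * dt for n in range(n_steps)]

rng = random.Random(3)
sqrt_dt = math.sqrt(dt)
contrib = [0.0] * n_steps                  # contribution of each horizon to dV/dx
for _ in range(n_paths):
    x, lam = x0, 1.0
    for n in range(n_steps):
        contrib[n] += weights[n] * marginal_utility(x) * lam / n_paths
        dw = rng.gauss(0.0, sqrt_dt)
        # Euler step for X and its variational process (linear drift, constant volatility).
        x, lam = x - kappa * x * dt + s * dw, lam - kappa * lam * dt

dV_dx_mc = sum(contrib)
dV_dx_exact = -delta * x0 / (delta + 2.0 * kappa)
early_share = sum(contrib[: n_steps // 10]) / dV_dx_mc
print(f"Monte Carlo: {dV_dx_mc:.4f}   closed form: {dV_dx_exact:.4f}")
print(f"share of the total from the first {n_steps // 10 * dt:.1f} time units: {early_share:.2f}")
```

Summing `contrib` recovers the marginal valuation, while partial sums show how much of it is accounted for by near versus distant horizons.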

Remark 10.2

Representations similar to (10.7) appear in the sensitivity analyses of option prices. See [Fournie et al., 1999].

10.3.3. Robustness

We next consider a general class of drift distortions that can help us study model misspecification concerns. We initially explore the consequences of an exogenously specified drift distortion. After that, we show how such a distortion can emerge endogenously as a decision-maker’s response to concerns about model misspecifications.

For diffusions, we entertain distortions to the Brownian increment. Instead of \(W\) being a multivariate Brownian motion, we allow it to have a drift \(H\) under a change in the probability distribution. We index the alternative probability specifications with their corresponding drift processes \(H\). Locally,

where \(W^H\) is a Brownian motion under the \(H\) probability. Given that both the distribution parameterized by \(H\) and the baseline distribution for the increment are normals with an identity matrix as the local covariance matrix, the local measure of relative entropy is given by the quadratic term:

See [James, 1992], [Anderson et al., 2003], and [Hansen et al., 2006] for further discussions.
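The quadratic form of the local relative entropy can be checked numerically. The sketch below, for a scalar shock, takes the baseline increment over a short interval \(\epsilon\) to be normal with mean zero and variance \(\epsilon\), takes the distorted increment to be normal with mean \(h\epsilon\) and the same variance, and integrates the log-likelihood ratio under the distorted density. Dividing by \(\epsilon\) should give \(h^2/2\). The function names, grid size, and the values of \(h\) and \(\epsilon\) are illustrative assumptions.

```python
import math


def normal_pdf(z, mean, var):
    return math.exp(-((z - mean) ** 2) / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)


def relative_entropy(h, eps, n=20001, width=10.0):
    """Relative entropy of N(h*eps, eps) with respect to N(0, eps), by the trapezoid rule."""
    sd = math.sqrt(eps)
    lo, hi = h * eps - width * sd, h * eps + width * sd
    dz = (hi - lo) / (n - 1)
    total = 0.0
    for k in range(n):
        z = lo + k * dz
        p = normal_pdf(z, h * eps, eps)   # distorted density
        q = normal_pdf(z, 0.0, eps)       # baseline density
        weight = 0.5 if k in (0, n - 1) else 1.0
        total += weight * p * math.log(p / q) * dz
    return total


h, eps = 0.7, 0.01
entropy_per_unit_time = relative_entropy(h, eps) / eps
print(f"numerical: {entropy_per_unit_time:.6f}   h^2 / 2: {0.5 * h * h:.6f}")
```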

We pose the decision problem as a Stackelberg game solved from a date zero perspective. The maximizing decision maker takes as given a drift distortion process, \(\{H_t : t \ge 0 \},\) when optimizing by choice of a decision process \(\{D_t : t \ge 0\}\). The minimizing decision maker then optimizes by choice of \(H\). This solution is posed in the space of stochastic processes.

We analyze this problem by building on insights in [Fleming and Souganidis, 1989]. They justify a value function, \(V,\) that solves:

Notice that this value function is constructed by solving a recursive version of the zero-sum game.

One of the conditions they impose is called the Bellman-Isaacs condition, which requires that exchanging the order of \(\min\) and \(\max\) does not alter the value function for the recursive game. In effect, [Fleming and Souganidis, 1989] show that coupled dynamic programs characterize the two-player, zero-sum game that interests us, as well as some other two-player, zero-sum games.

Following [Hansen et al., 2006], this approach gives us a recipe for constructing a minimizing drift distortion process \(\{H_t : t \ge 0\}\). The minimizing \(h\) in (10.10) expressed as a function of \(x\) satisfies:

where \(d^*\) is the maximizing \(d.\) Define the drift distortion:

Form

and

for the initialization \({\overline X}_0 = X_0\). Given this initial condition, by design \(X_t = {\overline X}_t\) for \(t \ge 0\) is a maximizer for \(\{ D_t : t \ge 0\}.\) We constructed the process \(\{ {\overline X}_t : t \ge 0 \}\) for the sole purpose of representing the minimizing drift distortion.
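The minimizing drift distortion used in this construction has a simple closed form in the standard quadratic-penalty setup. As a minimal sketch, assume that the only terms in the HJB objective that involve \(h\) are \(\frac{\partial V}{\partial x} \cdot \sigma h + \frac{\xi}{2} h \cdot h\), where \(\xi\) is a penalty parameter and the shock exposure of \(Y\) is ignored for simplicity. The first-order condition then gives \(h^* = -\frac{1}{\xi} \sigma' \frac{\partial V}{\partial x}\). The gradient, the exposure matrix, and the value of \(\xi\) below are hypothetical numbers chosen for illustration.

```python
xi = 0.05                       # robustness penalty (assumed value)
dV_dx = [1.0, -2.0]             # hypothetical gradient of V, n = 2
sigma = [[0.1, 0.0, 0.2],       # hypothetical n x k shock exposure matrix, k = 3
         [0.0, 0.3, -0.1]]


def exposure(dV_dx, sigma):
    """sigma' dV/dx: exposure of the value function to each of the k shocks."""
    n, k = len(sigma), len(sigma[0])
    return [sum(sigma[i][j] * dV_dx[i] for i in range(n)) for j in range(k)]


def objective(h, dV_dx, sigma, xi):
    """Assumed h-dependent part of the HJB objective: dV/dx . sigma h + (xi/2) |h|^2."""
    e = exposure(dV_dx, sigma)
    linear = sum(ej * hj for ej, hj in zip(e, h))
    penalty = 0.5 * xi * sum(hj * hj for hj in h)
    return linear + penalty


h_star = [-ej / xi for ej in exposure(dV_dx, sigma)]
value_at_h_star = objective(h_star, dV_dx, sigma, xi)

# Perturbing any coordinate of h_star should raise the objective.
perturbed = [objective([hj + (0.1 if j == m else 0.0) for j, hj in enumerate(h_star)],
                       dV_dx, sigma, xi) for m in range(len(h_star))]
print(h_star, value_at_h_star, perturbed)
```

A smaller \(\xi\) scales up \(h^*\), which is consistent with reading smaller values of \(\xi\) as stronger aversion to misspecification.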

The maximizing decision maker takes \(\{ {\overline X}_t : t \ge 0\}\) as exogenous when optimizing. We write the stochastic dynamics for the original state vector as:

The HJB equation for the maximizing decision maker (taking the minimizing solution as given) is:

By design:

for \(V\) that satisfies (10.10). Note that the HJB equation, as posed, allows for \({\bar x} \ne x\). Importantly, there is no contribution from differentiating \(H\) with respect to \(x\) since \(H\) only depends on the \(\bar{X}_t\) process. Confronted with the value function \({\overline V}(x,{\bar x}),\) the minimizing decision maker chooses \({\bar x} = x,\) implying that

As a consequence:

HJB equation (10.10) implies a corresponding Feynman-Kac equation:

Differentiate this equation with respect to \(x\):

where \(\rm{mat}\) denotes a matrix formed by stacking the column arguments. This expression uses the first-order conditions for \(h^*\) and an “Envelope Theorem” to cancel some terms. In this way, we can represent the partial derivative vector of the value function as:

We use the \({\widetilde {\mathbb E}}\) notation because we are using impulse responses computed under the uncertainty adjusted state evolution implied by imposing \(\{ H_t^* : t \ge 0 \}\).

Remark 10.3

While we demonstrated that we can treat a drift distortion as exogenous to the original state dynamics, for some applications we will want to view it as a change in the endogenous dynamics that are reflected in (10.11).

10.3.4. Allowing IES to differ from unity

Let \(\rho\) be the inverse of the intertemporal elasticity of substitution for a recursive utility specification. The utility recursion is now:

where \({\mu}_{v,t}\) is the local mean of \({V}(X) + Y\) with the robust adjustment discussed in the previous subsection. Compute:

With this computation, we modify the previous formulas by replacing the subjective discount factor, \(\exp(-\delta t),\) with

Thus the instantaneous discount rate is now state dependent and depends both on how the current utility compares to the continuation value and on whether \(\rho\) is greater or less than one. When the current utility exceeds the continuation value, the discount rate is scaled up when \(\rho\) exceeds one, and it is scaled down when \(\rho\) is less than one.

We replace the instantaneous contribution to the flow term, \(\delta \frac \partial {\partial x} U[X_t,d(X_t)]\) with:

Combining these contributions gives:

10.3.5. Jumps

We study a pre-jump functional equation in which a jump serves as a terminal condition with an associated continuation value. We allow multiple types of jumps, each with its own state-dependent intensity. We denote the intensity of a jump of type \(\ell\) by \(\mathcal{J}^\ell(x)\); the corresponding continuation value after a jump of type \(\ell\) has occurred is \(V^\ell(x)+y\). In applications, we’ll compute the post-jump continuation values \(V^\ell\) as components of a complete model solution. To simplify the notation, we impose \(\rho = 1,\) but it is straightforward to incorporate the \(\rho \ne 1\) extension we discussed in the previous subsection.

As in [Anderson et al., 2003], an HJB equation that adds concerns about robustness to misspecifications of jump intensities includes a robust adjustment to the intensities. The minimizing objective and constraints are separable across jumps. Thus we solve:

for \(\ell = 1,2, ..., L\), where \(g^\ell \ge 0\) alters the intensity of type \(\ell,\) and the term

measures the relative entropy of jump intensity specifications.
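One way to see where such an entropy term comes from is a two-outcome approximation over a short interval. The sketch below assumes that over an interval of length \(\epsilon\) a jump occurs with probability \(\epsilon {\mathcal J} g\) under the distorted specification and \(\epsilon {\mathcal J}\) under the baseline, computes the relative entropy of the two Bernoulli distributions, and checks that dividing by \(\epsilon\) approaches \({\mathcal J}(1 - g + g \log g)\) as \(\epsilon\) shrinks. Treating this expression as the entropy rate is our reading of the setup; the function names and numerical values are illustrative.

```python
import math


def bernoulli_entropy(eps, J, g):
    """Relative entropy of jump probability eps*J*g with respect to baseline eps*J."""
    p, q = eps * J * g, eps * J
    jump_part = p * math.log(g)                                   # p * log(p / q)
    no_jump_part = (1.0 - p) * (math.log1p(-p) - math.log1p(-q))  # (1-p) * log((1-p)/(1-q))
    return jump_part + no_jump_part


def entropy_rate(J, g):
    """Candidate small-interval limit: J * (1 - g + g log g)."""
    return J * (1.0 - g + g * math.log(g))


J, g = 2.0, 1.5   # hypothetical intensity and distortion
rates = {eps: bernoulli_entropy(eps, J, g) / eps for eps in (1e-2, 1e-4, 1e-6)}
for eps, rate in rates.items():
    print(f"eps = {eps:g}: entropy / eps = {rate:.8f}   limit = {entropy_rate(J, g):.8f}")
```

The limit is zero at \(g = 1\) and positive otherwise, as a relative entropy measure should be.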

The minimizing \(g^{\ell}\) is

with a minimized objective given by

The minimized objective

is increasing and concave in the value function difference: \(V^\ell - V\). A gradient inequality for a concave function implies that

Remark 10.4

To deduce the formula for relative entropy with jumps, consider a discrete-time approximation whereby the probability of a jump of type \(\ell\) over an interval of time \(\epsilon\) is (approximately) \(\epsilon{\mathcal J}^\ell g^\ell\) and the probability of not jumping is \(1 - \epsilon{\mathcal J}^\ell g^\ell,\) where \(g^\ell = 1\) at the baseline probability specification. The approximation improves as \(\epsilon\) declines to zero. The corresponding (approximate) relative entropy is

Differentiate this expression with respect to \(\epsilon\) to obtain:

In what follows we will also be interested in the partial derivative of the minimized function given in (10.13) with respect to the state vector:

where \(g^{\ell*}\) is the minimizer used to alter the jump intensity.
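The minimization over \(g^\ell\) and the envelope property of its derivative can be sketched for a scalar state. The example assumes that the objective for jump type \(\ell\) is \({\mathcal J}^\ell g^\ell (V^\ell - V) + \xi {\mathcal J}^\ell (1 - g^\ell + g^\ell \log g^\ell)\). Under that assumption the minimizer is \(g^{\ell*} = \exp[-(V^\ell - V)/\xi]\), the minimized objective is \(\xi {\mathcal J}^\ell (1 - g^{\ell*})\), and its derivative with respect to \(x\) is \(\xi \frac{\partial {\mathcal J}^\ell}{\partial x}(1 - g^{\ell*}) + {\mathcal J}^\ell g^{\ell*} \left(\frac{\partial V^\ell}{\partial x} - \frac{\partial V}{\partial x}\right)\), with no term involving the derivative of \(g^{\ell*}\). The functions `J`, `V`, and `V_post` and the value of \(\xi\) are hypothetical.

```python
import math

xi = 0.5   # robustness penalty (assumed value)


# Hypothetical scalar-state ingredients, chosen only for illustration.
def J(x):            # jump intensity
    return 0.1 + 0.05 * x * x


def dJ(x):
    return 0.1 * x


def V(x):            # pre-jump value function
    return -x * x


def dV(x):
    return -2.0 * x


def V_post(x):       # post-jump continuation value V^ell
    return -1.5 * x * x - 0.2


def dV_post(x):
    return -3.0 * x


def objective(g, x):
    """Assumed objective: J g (V_post - V) + xi J (1 - g + g log g)."""
    return J(x) * g * (V_post(x) - V(x)) + xi * J(x) * (1.0 - g + g * math.log(g))


def g_star(x):
    return math.exp(-(V_post(x) - V(x)) / xi)


def minimized(x):
    return xi * J(x) * (1.0 - g_star(x))


def envelope_derivative(x):
    gs = g_star(x)
    return xi * dJ(x) * (1.0 - gs) + J(x) * gs * (dV_post(x) - dV(x))


x, eps = 1.0, 1e-6
fd = (minimized(x + eps) - minimized(x - eps)) / (2.0 * eps)
print(f"g* = {g_star(x):.4f}, minimized objective = {minimized(x):.4f}")
print(f"finite difference = {fd:.6f}, envelope formula = {envelope_derivative(x):.6f}")
```

In this example the post-jump value is lower than the pre-jump value, so \(g^{\ell*} > 1\): the minimizing distortion raises the intensity of an adverse jump.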

When constructing the HJB equation, we continue to include the diffusion dynamics and now incorporate the \(L\) possible jumps. The usual term:

is replaced by

as an adjustment for robustness in the jump intensities.

The resulting HJB equation is:

We again construct a Feynman-Kac equation by substituting in \(h^*(x)\). Applying an Envelope Theorem to first-order conditions for minimization tells us that \(h^*(x)\) should not contribute to the derivatives of the value function. This leads us to focus on:

It is revealing to rewrite equation (10.14) as:

Notice how distorted intensities act like endogenous discount factors in this equation. The last two terms add flow contributions to pertinent Feynman-Kac equations via dot products with respect to \(m\). It is significant that these terms do not include derivatives of \(g^{\ell*}\) with respect to \(x\).

For simulating our asset pricing representation of the partial derivatives of the value function, the discounting term becomes state dependent in order to adjust for the jump probabilities:

In addition, three flow terms are discounted:

It is revealing to think of the right side as providing three different sources of the marginal values. The contributions of \(V^{\ell} - V\) and \(\frac {\partial V^\ell}{\partial x}\) are to be expected because they help to quantify the consequences of potential jumps. We may further decompose terms ii) and iii) by the jump type \(\ell\) to assess which jumps are the most important contributors to the marginal valuations. Analogous representations can be derived for the \(\frac {\partial V^\ell}{\partial x}\)’s conditioned on each of the jumps occurring.

Notice that term ii) of formula (10.15) includes derivatives of the jump intensity with respect to the state of interest. In some examples, the jump intensities are constant or depend only on an exogenous state. In this case the second term drops out and only the first and third terms remain.

Simulation-based methods can be used to compute these value contributions. They should be conducted under implied worst-case diffusion dynamics. With multiple jump components, we decompose contributions to the marginal utility by jump types \(\ell\).

10.3.6. Climate change example

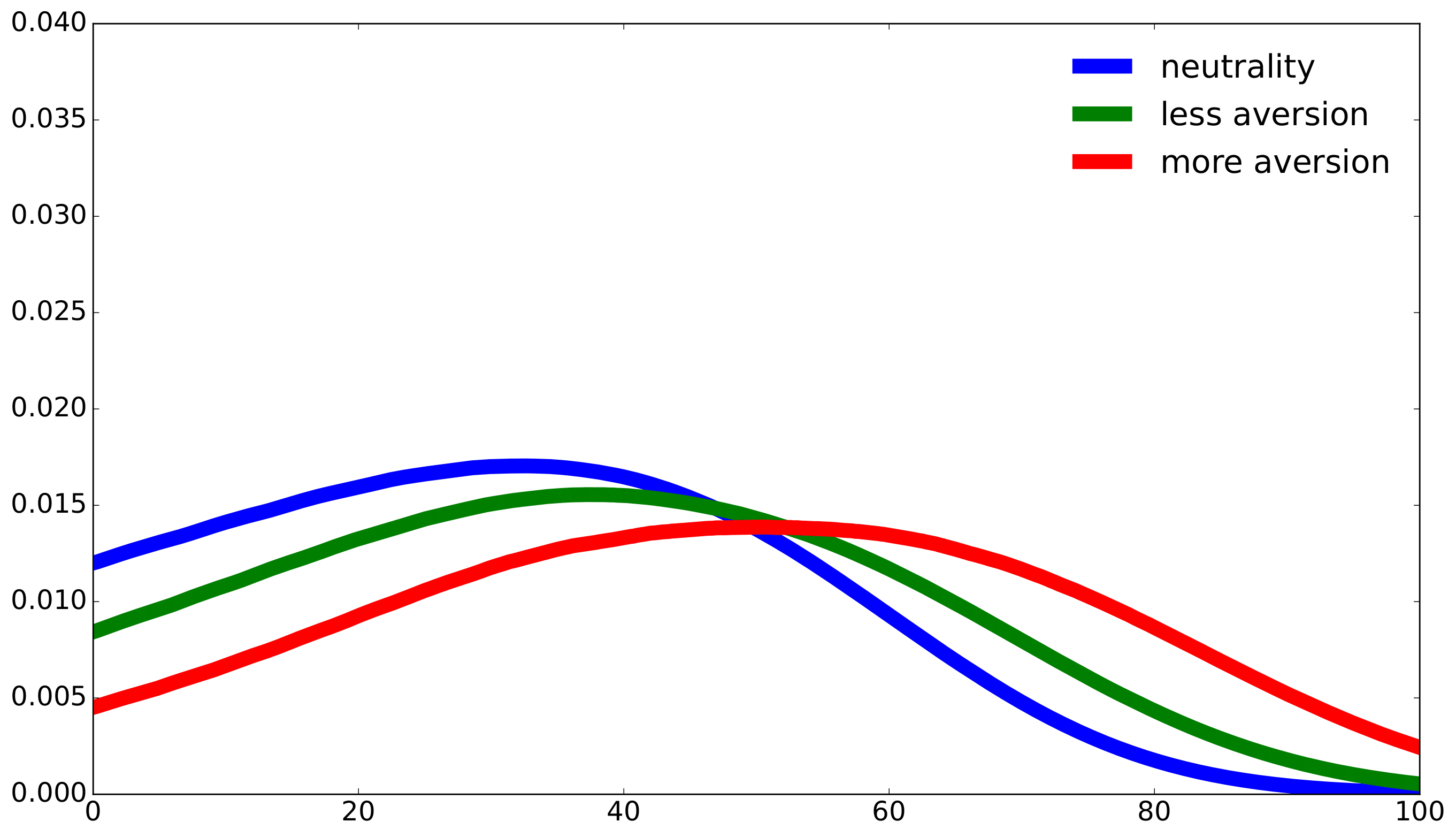

[Barnett et al., 2024] use representation (10.15) to decompose their model-based measure of the social cost of climate change and the social value of research and development. In their analysis, there are two types of Poisson jumps. One is the discovery of a new technology and the other is recognition of how curved the damage function is for more extreme changes in temperature. The magnitude of damage curvature is revealed by a jump triggered by a temperature anomaly between 1.5 and 2 degrees Celsius. [Barnett et al., 2024] allow for twenty different damage curves. While there are twenty-one possible jump types, we group them into damage jumps (one through twenty) and a technology jump (twenty-one). [Barnett et al., 2024] display the quantitative importance of a technology jump and a damage jump in contributing to the social value of research and development. We report analogous findings for the social cost of climate change measured as the negative of the marginal value of temperature. We take the negative of the marginal value of climate change because warming induces a social cost (a negative benefit).

| | capital | knowledge stock |
|---|---|---|
| more aversion (\(\xi=.05\)) | -0.184 | -0.008 |
| less aversion (\(\xi=.1\)) | -0.096 | -0.003 |

Table 1: Drift distortions for capital stock evolution and the knowledge stock evolution at the initial time period.

| | flow i | flow ii | flow iii | sum | R&D investment | capital investment |
|---|---|---|---|---|---|---|
| more aversion | 15.0 (16 %) | 59.9 (64 %) | 18.7 (20 %) | 93.6 | 0.0284 | 0.750 |
| less aversion | 9.3 (14 %) | 41.0 (63 %) | 14.9 (23 %) | 65.3 | 0.0151 | 0.764 |
| neutrality | 5.6 (12 %) | 31.6 (68 %) | 9.3 (20 %) | 46.5 | 0.0075 | 0.773 |

Table 2: Three flow contributions to the social value of R&D for the technology jump only model. For “more aversion”, \(\xi = .05\); and for “less aversion”, \(\xi = .1\). Each flow contribution has been divided by the marginal utility of (damaged) consumption. Both investments are expressed as a fraction of output.
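The percentage shares reported in Table 2 can be recomputed from the flow levels. The short sketch below takes each share to be a flow divided by the reported sum for that row, rounded to the nearest percent, which is our reading of how the percentages were formed. The dictionary names are illustrative.

```python
# Flow contributions (i, ii, iii) and reported sums, copied from Table 2.
flows = {
    "more aversion": (15.0, 59.9, 18.7),
    "less aversion": (9.3, 41.0, 14.9),
    "neutrality": (5.6, 31.6, 9.3),
}
reported_sum = {"more aversion": 93.6, "less aversion": 65.3, "neutrality": 46.5}

# Share of each flow in the reported sum, in percent, rounded to the nearest integer.
shares = {
    name: [round(100.0 * f / reported_sum[name]) for f in row]
    for name, row in flows.items()
}
for name, row in shares.items():
    print(name, row)
```

In every row, flow ii accounts for roughly two thirds of the total.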

| \(\xi\) | SVRD | R&D investment / output |
|---|---|---|
| \(\infty\) | 44.6 | .0075 |
| .10 | 63.4 | .0151 |
| .05 | 87.9 | .0284 |
| .01 | 88.3 | .0300 |
| .005 | 44.4 | .0072 |

Table 3: Social value of R&D (technology jump only) as a function of the robustness parameter, \(\xi\).

| \(\xi\) | SCGW | emissions |
|---|---|---|
| \(\infty\) | 59.3 | 9.28 |
| .10 | 92.8 | 9.02 |
| .05 | 151.1 | 8.66 |
| .01 | 413.6 | 7.30 |
| .005 | 451.1 | 6.87 |

Table 4: Social cost of global warming (technology jump only) as a function of the robustness parameter, \(\xi\).

Fig. 10.1 Density for the time of the technology jump.